https://googledatalab.github.io/pydatalab/datalab.bigquery.html

Prerequisite

The vpx_vm table must be uploaded to BigQuery Dataset already. Otherwise, the code will throw an exception "Exception: notFound: Not found: Table .. . .. not found in location ..

"

Performing a Select Count(*) on vpx_vm table.

#Template

import google.datalab.bigquery as bq

# query

bq_vm = bq.Query('SELECT count(*) FROM EDA.vpx_vm')

output_opt = bq.QueryOutput.table(use_cache=False)

#executing the query statement

result = bq_vm.execute().result()

##Print result set

raw = bq_vm.execute(output_options=output_opt).result()

source_df = raw.to_dataframe()

print (source_df)

#Print result set all in a line.

resultset = bq_vm.execute(output_options=output_opt).result().to_dataframe()

print (resultset) f0_

0 4258

f0_

0 4258

Build upon from the template.

import google.datalab.bigquery as bq

bq_vm = bq.Query('SELECT * FROM EDA.vpx_vm')

output_opt = bq.QueryOutput.table(use_cache=False)

raw = bq_vm.execute(output_options=output_opt).result()

source_df = raw.to_dataframe()

print('Column Data type ')

source_df.dtypes

Perform select all on the table.

import google.datalab.bigquery as bq

bq_vm = bq.Query('SELECT * FROM EDA.vpx_vm')

## simply printing the statement out.

print (bq_vm)

print ('\n')

output_opt = bq.QueryOutput.table(use_cache=False)

#print (output_opt)

#result = bq_vm.execute().result()

#print (result)

##Print result set

raw = bq_vm.execute(output_options=output_opt).result()

source_df = raw.to_dataframe()

print (source_df)

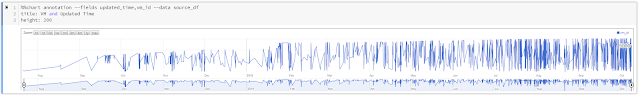

BigQuery Query - SELECT * FROM EDA.vpx_vm

vm_id ds_id updated_time

0 27 4098 2018-08-21 22:05:03.437

1 213 4098 2018-08-21 22:05:04.547

2 730 4098 2018-08-21 22:05:05.440

3 799 4098 2018-08-21 22:05:02.933

4 907 4098 2018-08-21 22:05:05.123

5 1006 4098 2018-08-21 22:05:06.153

6 1018 4098 2018-08-21 22:05:05.367

7 1019 4098 2018-08-21 22:05:04.040

8 1022 4098 2018-08-21 22:05:03.937

9 1041 4098 2018-08-22 18:02:38.317

10 1059 4098 2018-08-21 22:05:06.230

11 1162 4098 2018-08-21 22:05:06.063Using

source_df.head() to get the following results.

In comparison, BigQuery is a lot more natural than Panda where the Python Panda API is heavily used. Database operation can easily fit into the BigQuery.Query API. Both are easily integrate with GCP Chart. Basically, the charting can be done with BigQuery, Python BigQuery API or Panda.

BigQuery

%%bq query --name host_resource_by_ip_sum_data

select ip_address, sum(cpu_hz) as SUM_CPU, sum(mem_size) as SUM_MEMORY from EDA.vpx_host group by ip_address

Python BigQuery

#Template

import google.datalab.bigquery as bq

# query

bq_vm = bq.Query('SELECT count(*) FROM EDA.vpx_vm')

output_opt = bq.QueryOutput.table(use_cache=False)Python Panda

import pandas as pd

from StringIO import StringIO

#create dataframe

vm_df = pd.read_csv(StringIO(vpx_vm_data))

date_summary=vm_df.groupby(['updated_time'],as_index=False)['vm_id'].sum()

print(date_summary.head())

date_summary['updated_time'] = pd.to_datetime(date_summary['updated_time'])

date_summary.dtypes

No comments:

Post a Comment